Machine learning models are only as good as the data used to train them. Sexist and racist AI systems made the news in 2017.

Yesterday, Slava Akhmechet tweeted about a word2vec test he ran. According to the tweet, the model was trained on the Google News dataset, which contains about 100 billion words from English-language news articles.

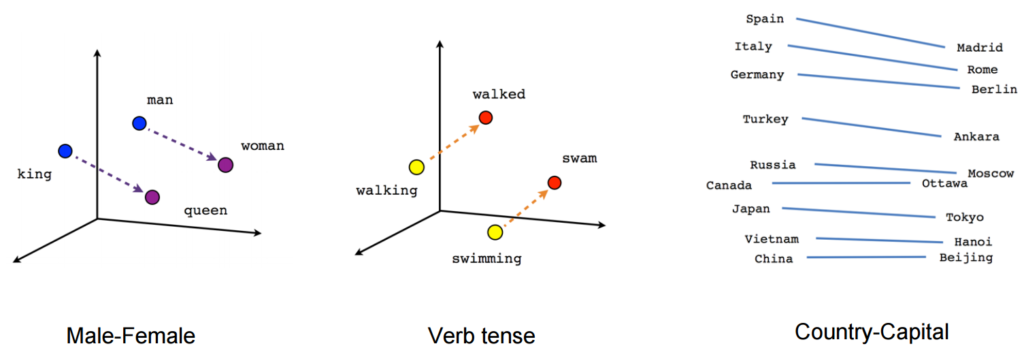

Word2vec represents each word as a vector, which makes it possible to find words with similar meanings and to solve analogies. For example, “man” is to “king” as “woman” is to ________ … Can you guess? Yes, “queen”.
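An analogy like this is usually solved with vector arithmetic: take the vector for “king”, subtract “man”, add “woman”, and pick the remaining word whose vector is closest by cosine similarity. The sketch below is a minimal illustration under assumptions: the five words and their 3-dimensional vectors are hypothetical, hand-picked values, not taken from any trained model. On a real model, gensim's `KeyedVectors.most_similar(positive=["king", "woman"], negative=["man"])` runs a comparable query.

```python
import math

# Hypothetical 3-dimensional toy vectors, hand-picked for illustration.
# Real word2vec vectors (e.g. the Google News model) have 300 dimensions.
VECTORS = {
    "man":   [1.0, 0.0, 0.2],
    "woman": [0.0, 1.0, 0.2],
    "king":  [1.0, 0.0, 0.9],
    "queen": [0.0, 1.0, 0.9],
    "apple": [0.3, 0.3, -0.8],
}


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vectors=VECTORS):
    """Solve 'a is to b as c is to ?' with the vector offset b - a + c.

    Returns the vocabulary word (excluding the three inputs) whose vector
    has the highest cosine similarity to the offset vector.
    """
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("man", "king", "woman"))  # queen
```

Excluding the three input words matters: on real embeddings, the vector nearest to the offset is often one of the inputs themselves.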

However, Slava’s tweet shows that according to news text, while “he” is “persuasive”, “she” is “seductive”, and so on:

Got word2vec trained on the Google News dataset working on my laptop. Holy fuck… pic.twitter.com/1kl43gtuWi

— Slava Akhmechet (@spakhm) September 17, 2017

I’ve run some word2vec tests in Finnish. I thought it would be interesting to see whether a similar bias exists in Finnish as well. You know, Finland is one of the most gender-equal countries, and we speak a language that has only gender-neutral pronouns. In Finnish, both “he” and “she” are the same word: “hän”.

Results

In these tests, Finnish looks more gender-equal than English.

For example, in Finnish, both “man” and “woman” are similar to “gynecologist” and “general practitioner”. (The top-10 most similar words for “woman” included “nurse”, which was missing for “man”.)

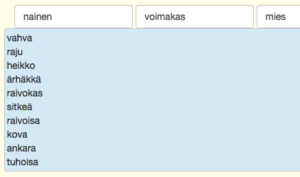

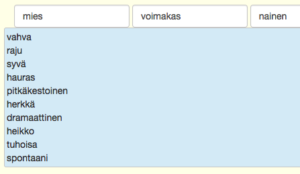

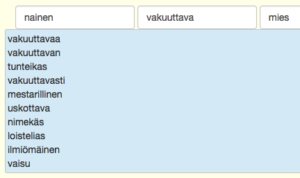

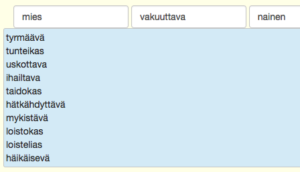

(Man+persuasive) gives mostly the same results as (Woman+persuasive): both include “credible”, “dashing”, and “impassioned”.
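A query such as (Man+persuasive) can be read as a nearest-neighbour search around the sum of two word vectors; the same search with a single word produces a top-10 list like the one for “woman” above. The sketch below assumes cosine similarity over unit-normalised vectors, as word2vec tooling typically uses; the words (English glosses) and vectors are hypothetical toy values, not the Finnish model's. gensim's `KeyedVectors.most_similar(positive=[...], topn=10)` performs a comparable query on a real model.

```python
import math

# Hypothetical toy vectors with English glosses; a real Finnish model
# would use Finnish words and a few hundred dimensions.
VECTORS = {
    "man":         [1.0, 0.0, 0.0],
    "woman":       [0.0, 1.0, 0.0],
    "persuasive":  [0.5, 0.5, 1.0],
    "credible":    [0.4, 0.4, 1.0],
    "impassioned": [0.6, 0.3, 0.9],
    "banana":      [0.0, 0.0, -1.0],
}


def unit(v):
    """Scale v to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def most_similar(positive, topn=10, vectors=VECTORS):
    """Rank vocabulary words by cosine similarity to the sum of the
    unit-normalised query vectors, skipping the query words themselves."""
    query = unit([sum(col) for col in zip(*(unit(vectors[w]) for w in positive))])
    scores = []
    for word, vec in vectors.items():
        if word in positive:
            continue
        score = sum(a * b for a, b in zip(query, unit(vec)))
        scores.append((word, score))
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:topn]


for probe in ("man", "woman"):
    print(probe, [w for w, _ in most_similar([probe, "persuasive"], topn=2)])
# man ['impassioned', 'credible']
# woman ['credible', 'impassioned']
```

With these toy vectors both queries return the same two neighbours in a different order, which is the kind of “mostly equal” result described above.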

Traffic is mostly equal as well: (Man+biker) has a similarity score of 0.54, and (Woman+biker) 0.51.

Personal qualities: (Man+credible) = 0.24 vs (Woman+credible) = 0.25; (Man+dependable) = 0.21 vs (Woman+dependable) = 0.22.
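Pairwise scores like these are, presumably, cosine similarities between two word vectors, the usual measure in word2vec tools (the post does not state the measure explicitly). A minimal sketch, with hypothetical 2-dimensional vectors standing in for real embeddings:

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v, in [-1, 1].

    Only the direction of the vectors matters; scaling either one
    does not change the score.
    """
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical vectors, for illustration only.
man = [3.0, 4.0]
biker = [4.0, 3.0]
print(round(cosine_similarity(man, biker), 2))  # 0.96
```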

It’s not all that rosy, though.

(Man+manager) = 0.24 vs (Woman+manager) = 0.17; (Man+sensitive) = 0.23 vs (Woman+sensitive) = 0.30. “Manager” sits noticeably closer to “man”, and “sensitive” closer to “woman”.
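To compare the reported pairs side by side, one can subtract the “woman” score from the “man” score for each word. The sketch below uses only the scores quoted in this post; the `SCORES` dictionary and the `gender_gap` helper are hypothetical names introduced for illustration. It is plain arithmetic on the quoted numbers, not an established bias metric.

```python
# Similarity scores as quoted in this post (Finnish model).
# Each entry: word -> (score with "man", score with "woman").
SCORES = {
    "biker": (0.54, 0.51),
    "credible": (0.24, 0.25),
    "dependable": (0.21, 0.22),
    "manager": (0.24, 0.17),
    "sensitive": (0.23, 0.30),
}


def gender_gap(scores):
    """Return (word, man - woman) pairs, largest absolute gap first.

    A positive gap means the word sits closer to "man"; a negative gap
    means it sits closer to "woman". Ties keep their input order.
    """
    gaps = [(word, round(m - w, 2)) for word, (m, w) in scores.items()]
    return sorted(gaps, key=lambda pair: abs(pair[1]), reverse=True)


for word, gap in gender_gap(SCORES):
    print(f"{word:<11} {gap:+.2f}")
```

“Manager” and “sensitive” show the largest gaps, in opposite directions, while the personal qualities differ by only a hundredth.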

The Finnish word2vec model was trained on the Finnish Internet, i.e. articles, news, discussions, and online forums in Finnish (model by the Turku BioNLP Group).
